Why Most Indie Games are 2D Games

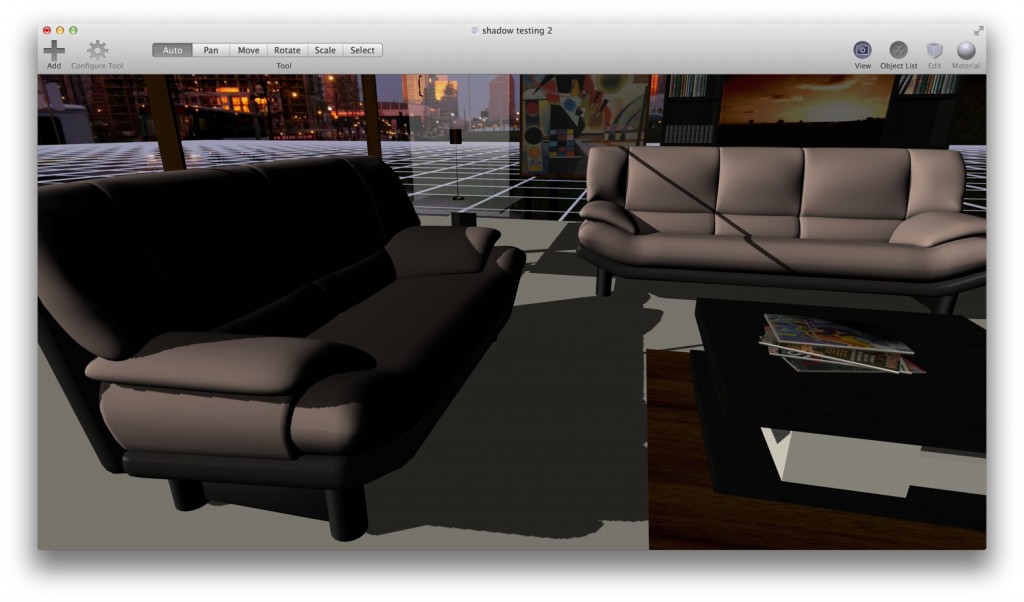

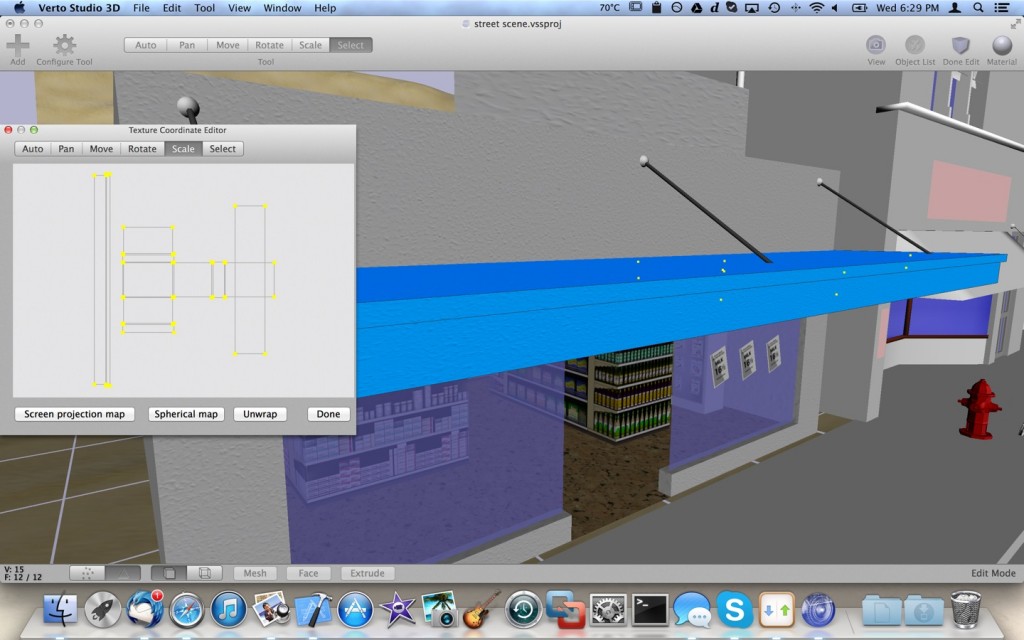

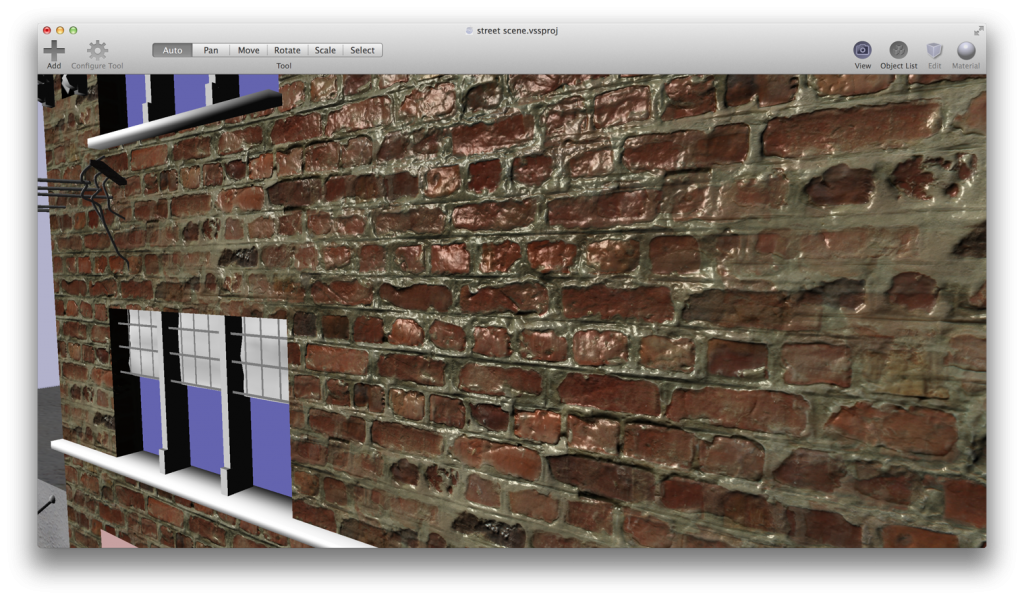

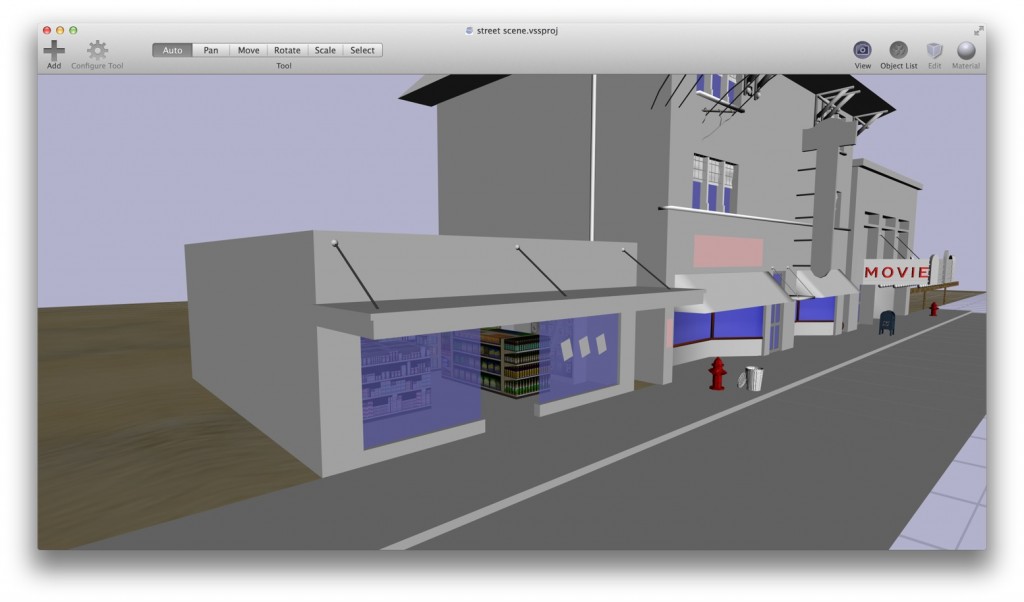

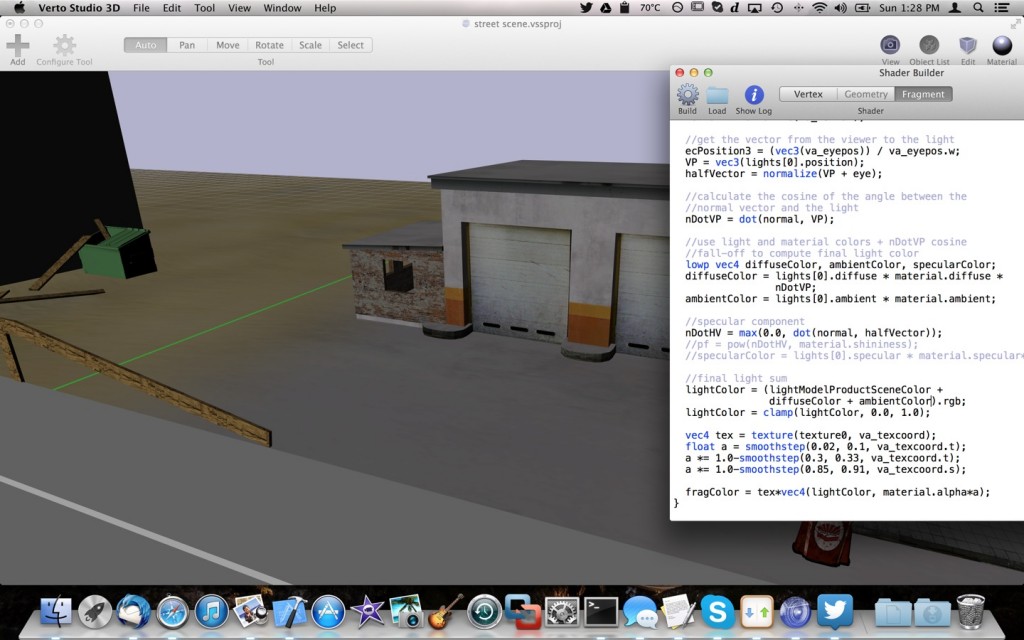

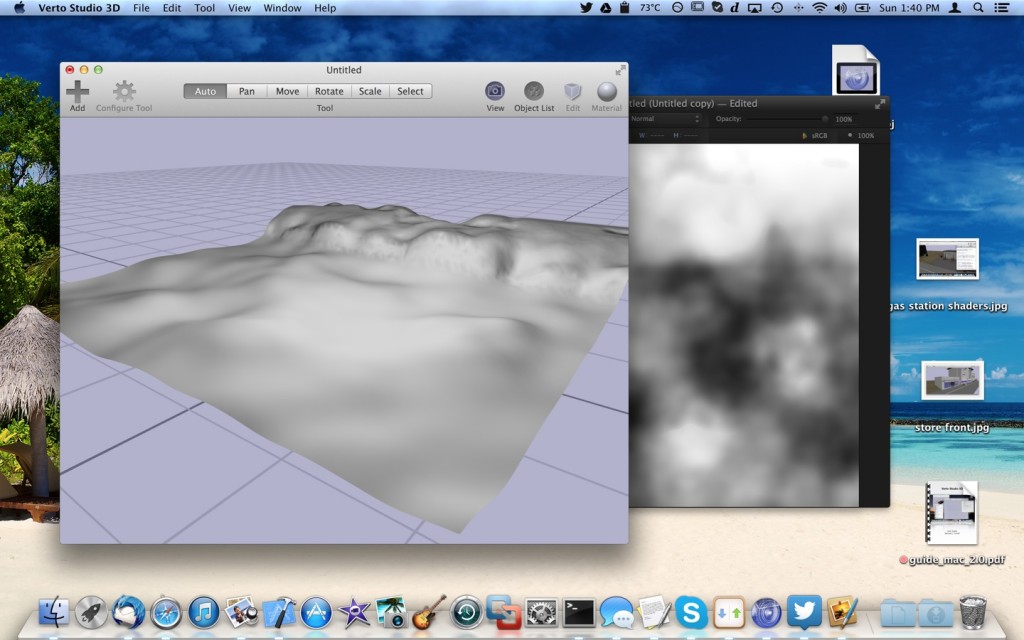

Okay, so do you want to know why most indie games are in 2D? Read the posts from the last month or so on my blog. In summary, you’ll notice that 90% of all that work was asset creation. Keep in mind, this is supposed to be a small-scale, single-level 3D mini game. Artwork, modeling, texturing, more artwork, more texturing, more modeling, etc. Simply stated, asset creation for 3D games is LONG, HARD work, and in my case, I didn’t even do it all myself (I had a character modeler/animator help me with my single base character model, and I’ve relied on TurboSquid quite a few times). Even so, compared to 2D game development, this process can be extremely time consuming. If this were a 2D platformer, most devs would probably have had at least basic assets for a “level 1” finished in the first day, and would have been programming the actual game long before now. I’ve always tried to ignore this fact, but it’s true. Look at the number of delays, setbacks, and release date shifts that have plagued recent AAA game projects and you’ll see that rapid 3D game development is not an easy task even for seasoned developers.

Most independent game developers have skillsets that fall into one of two domains: programmer or artist. Now, I know there’s story writing, sound effects, music, etc. But just about everything involved in a game’s development really splits into the two big umbrellas of either programming or creative (artwork, asset creation, etc). The problem here is that as you advance in your particular domain and start to get really good at one aspect of the field, you often have to let the other side of things wither somewhat. To become a really good programmer, and I mean a really good programmer, you need to get obsessed with your craft. That means even if you were a decent artist, you probably won’t keep up your art skills as much. The same goes for becoming a very good artist, 3D artists included.

As the industry has shifted toward large-scale, theatrical AAA games, more and more specialized craftsmen/craftswomen are needed to spend 8-hour days on something as simple as the texture of a claw of a single enemy in a single dungeon of a game. The sheer manpower that AAA studios can throw at a game allows for the attention to detail that 3D games require to feel right. Even a “simple” game like Super Mario 64 likely required dozens of artists to pore over every keyframe of Mario’s animations to make sure it was perfect. Now don’t get me wrong, this was also true of the SNES/Genesis 2D era. However, attention to detail, or the lack thereof, in these kinds of games comes with less of a penalty, and certainly less of a time commitment.

It’s a hard pill to swallow, since my dream of making games, which started at a young age, has always predominantly been a dream of 3D game development. But you know what? I still love 3D game development. It’s supposed to be hard. The hard… is what makes it great. Tom Hanks is right.

So where does that leave the indie developer with respect to 3D game development? Well, when you have a team of only 2-3 people (or in my case, 1 people), you CAN succeed at independent 3D game development. The absolutely crucial thing here is to understand and accept your limitations as an independent. For starters, no matter who you are, limit your freaking scope. Understand that you simply can’t make an AAA-length 3D game in any reasonable amount of time. If you’re 1 person, and a hobbyist with almost zero budget (like me), set a goal for a game that requires at most 1-2 3D environments with very few (if any) unique character models. If you are weak with art but you have the funds, consider using services like TurboSquid to procure assets. If you are weak with 3D programming, consider (after buying a few books) using a 3D engine such as Unity or Unreal instead of building your own.

Above all, plan out the scope of your game to a T. Then, give yourself a pre-determined amount of time to finish the game project… and… quadruple it.

Driveby Gangster Update – a Dive Into Swift

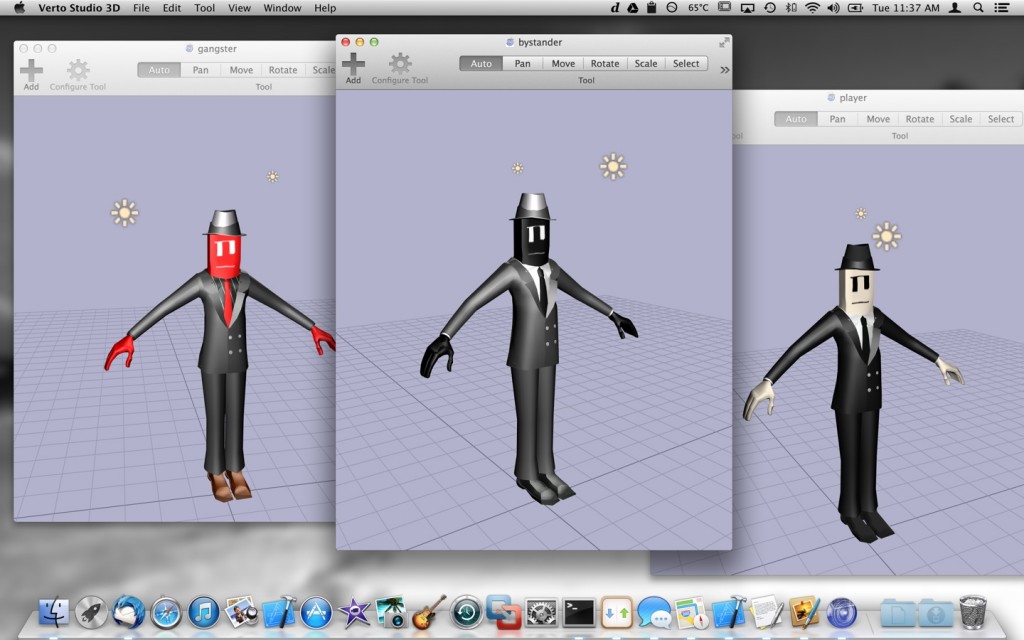

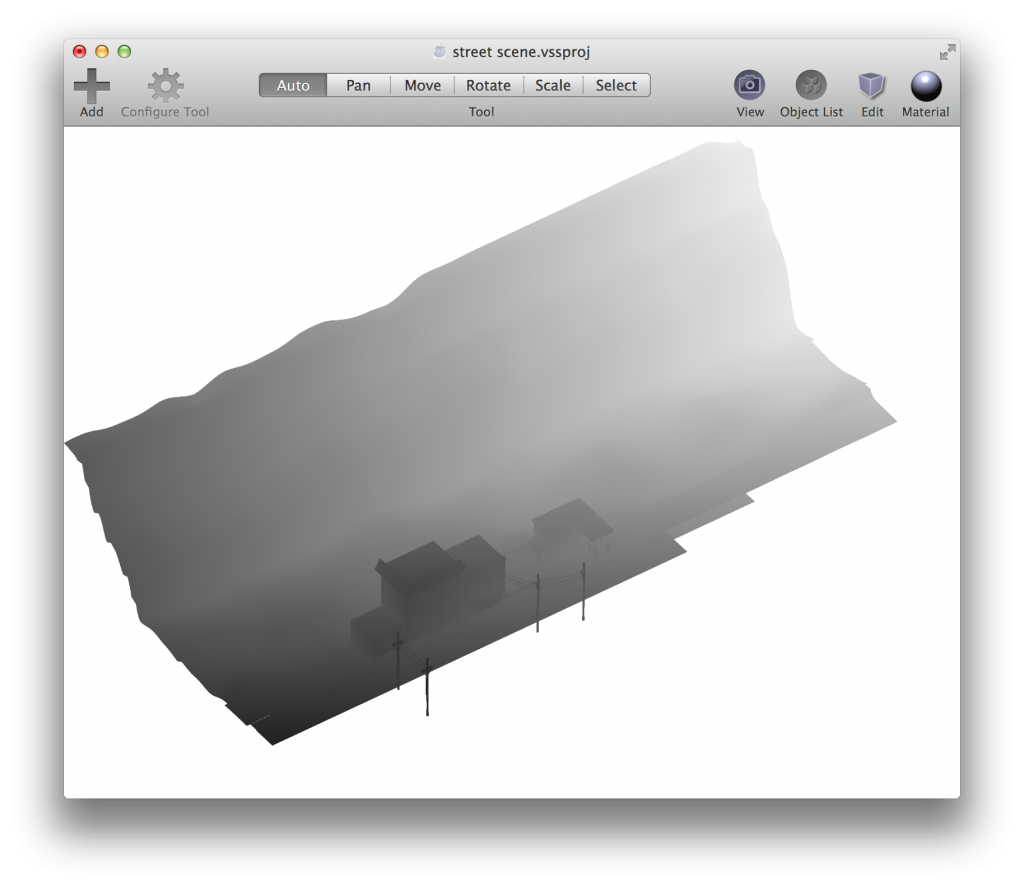

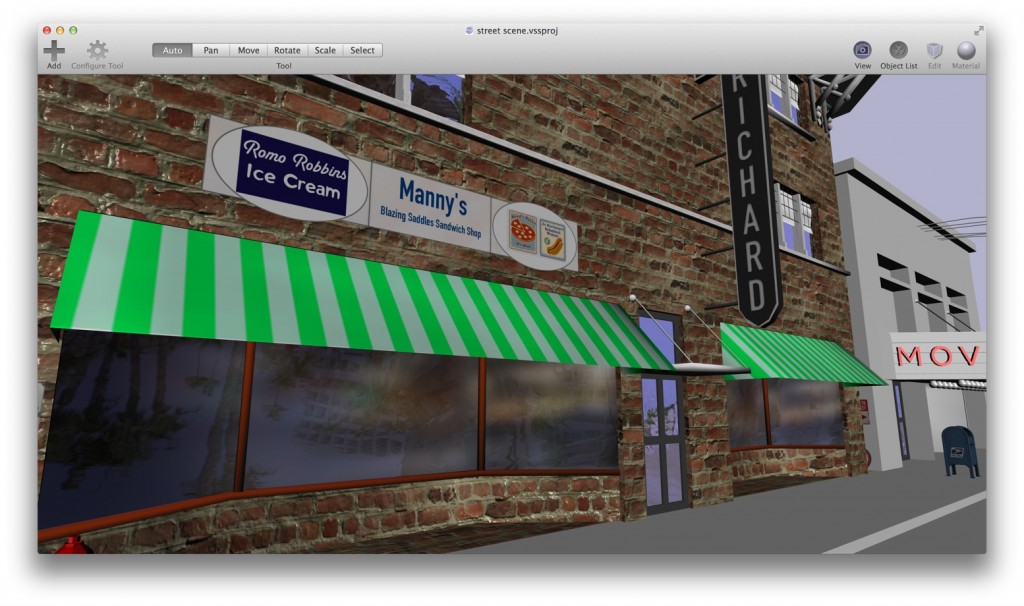

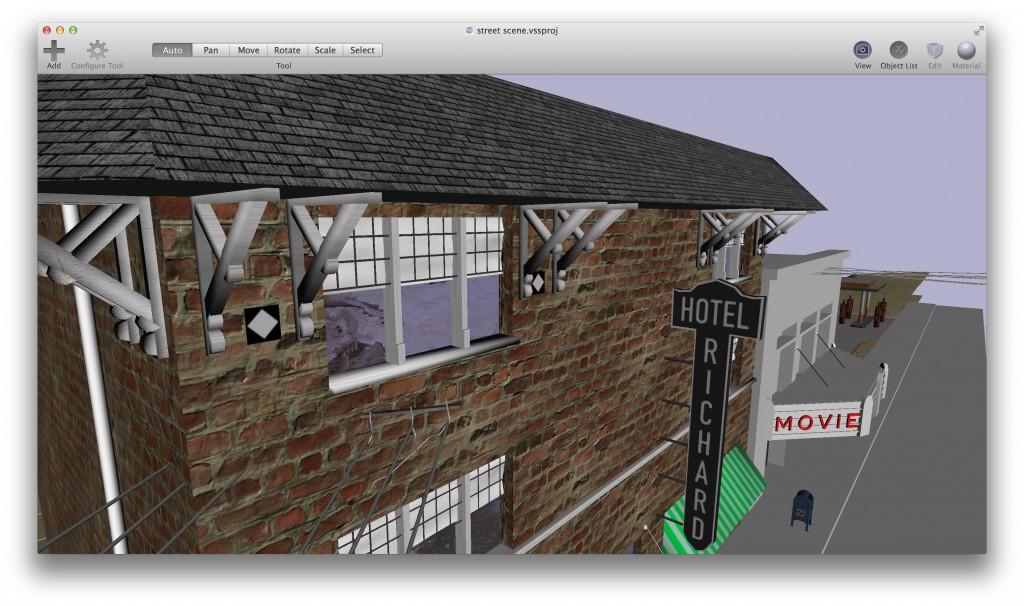

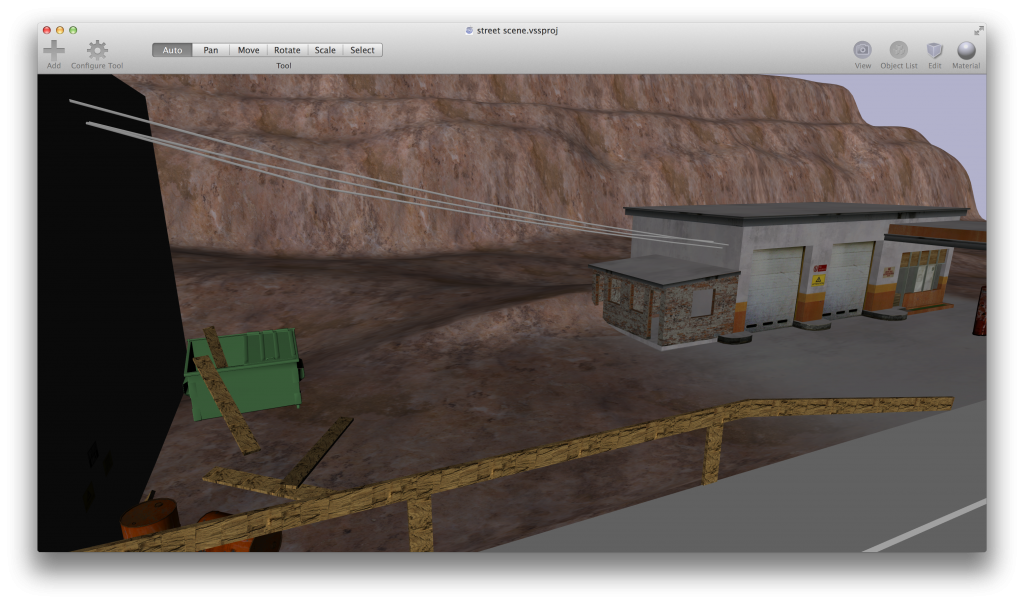

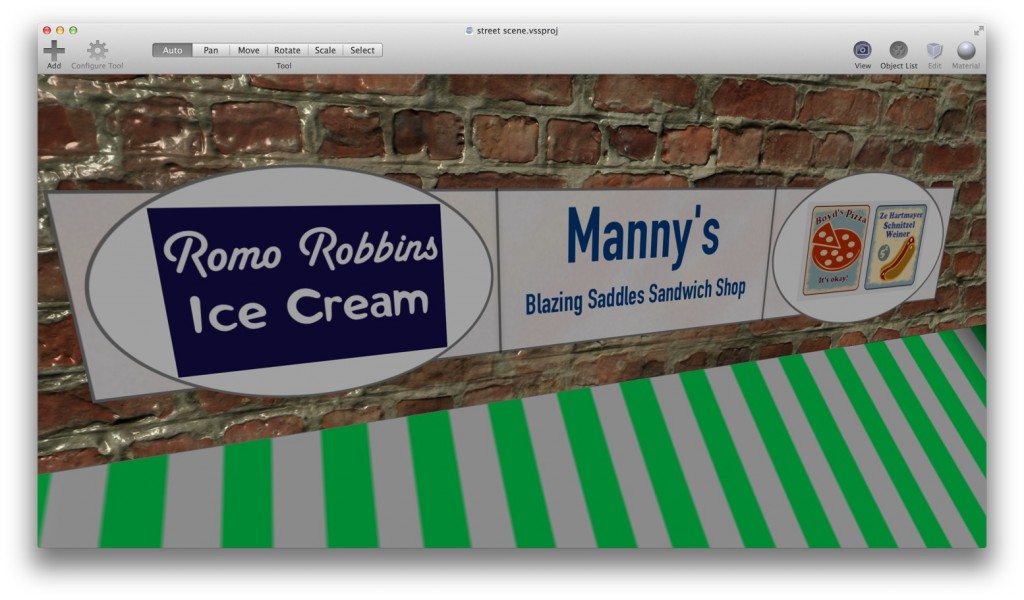

Over one month into my planned “2 week” 3D game project, 90% of my assets are done! I still need a 3D tommy gun and an old-timey driveby gangster car. But I’m going to start actually programming (imagine that) the very simple AI that will drive the walking of most of the bystanders in the game. I’ve got my work cut out for me, since most of the game logic code I’ve written to this point is throwaway “get-it-to-work” POC code. I now need to get organized and put a basic hierarchical game architecture together. More fun: as I mentioned in my first post, I’ll be doing most of the game classes and logic in Swift. Swift, despite being officially released, still has its problems. For me, the speed of the IDE (for things like auto-completion) while typing in Swift is the biggest gripe I have with the language so far. A close second is the annoying, cryptic compiler errors that have nothing to do with the underlying problem of “I’m expecting an Int32, but you gave me an Int”. Either way, I’m keeping my promise to write the game in Swift as an experiment to see if the language is truly something I’ll want to leverage for game development in the future.
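For anyone who hasn’t hit the Int32 vs. Int thing yet, here’s a rough sketch of what it looks like. Swift won’t implicitly convert between integer types, so you have to convert explicitly. The function `bystanderCountFromEngine` and the variable names are made up for illustration (they aren’t from my actual game code), and the overflow part assumes a 64-bit platform where `Int` is 64 bits wide.

```swift
// Hypothetical stand-in for a C-style engine API that hands back an Int32.
func bystanderCountFromEngine() -> Int32 {
    return 12
}

let spawned: Int = 3

// This line would not compile, because Swift refuses to mix Int32 and Int:
// let total = bystanderCountFromEngine() + spawned

// Widening Int32 to Int is always safe, so a plain initializer is fine.
let total = Int(bystanderCountFromEngine()) + spawned

// Going the other way can overflow. Int32(big) would trap at runtime,
// so use the failable or clamping initializers instead.
let big: Int = 5_000_000_000          // assumes 64-bit Int
let narrowed = Int32(exactly: big)    // nil, because the value doesn't fit
let clamped = Int32(clamping: big)    // pinned to Int32.max

print(total, narrowed as Any, clamped)
```

Nothing fancy, but once you know the fix is usually just wrapping one side in `Int(...)` or `Int32(...)`, the cryptic errors get a lot less scary.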