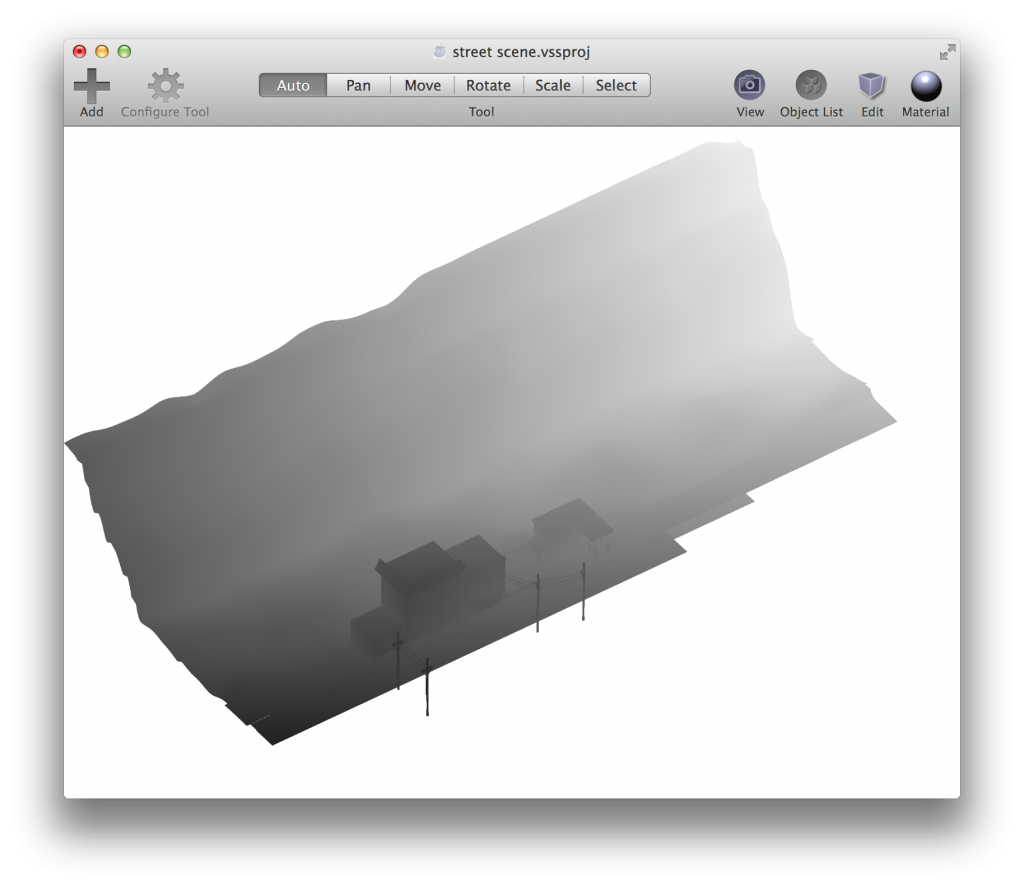

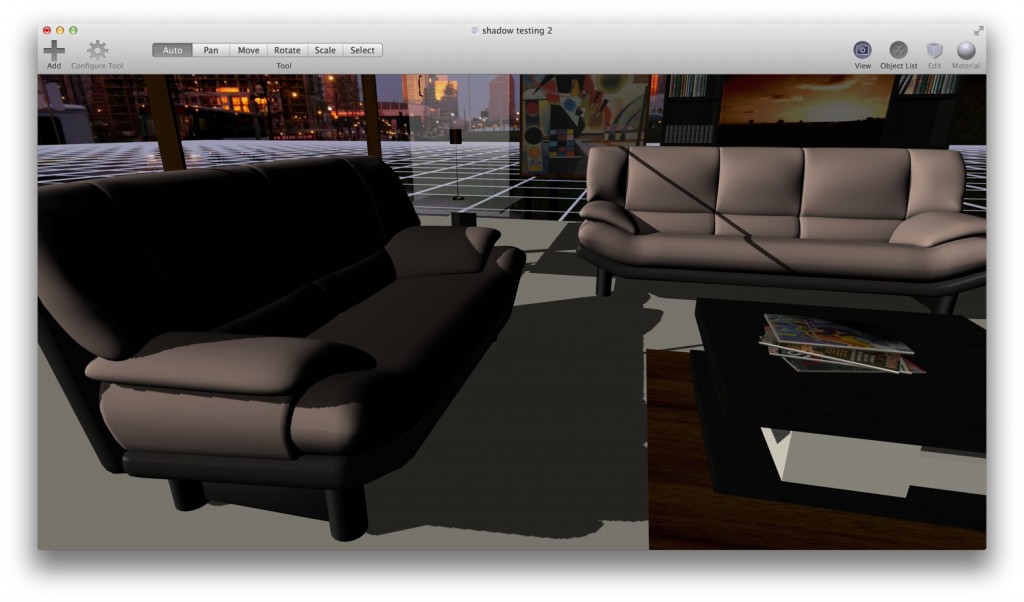

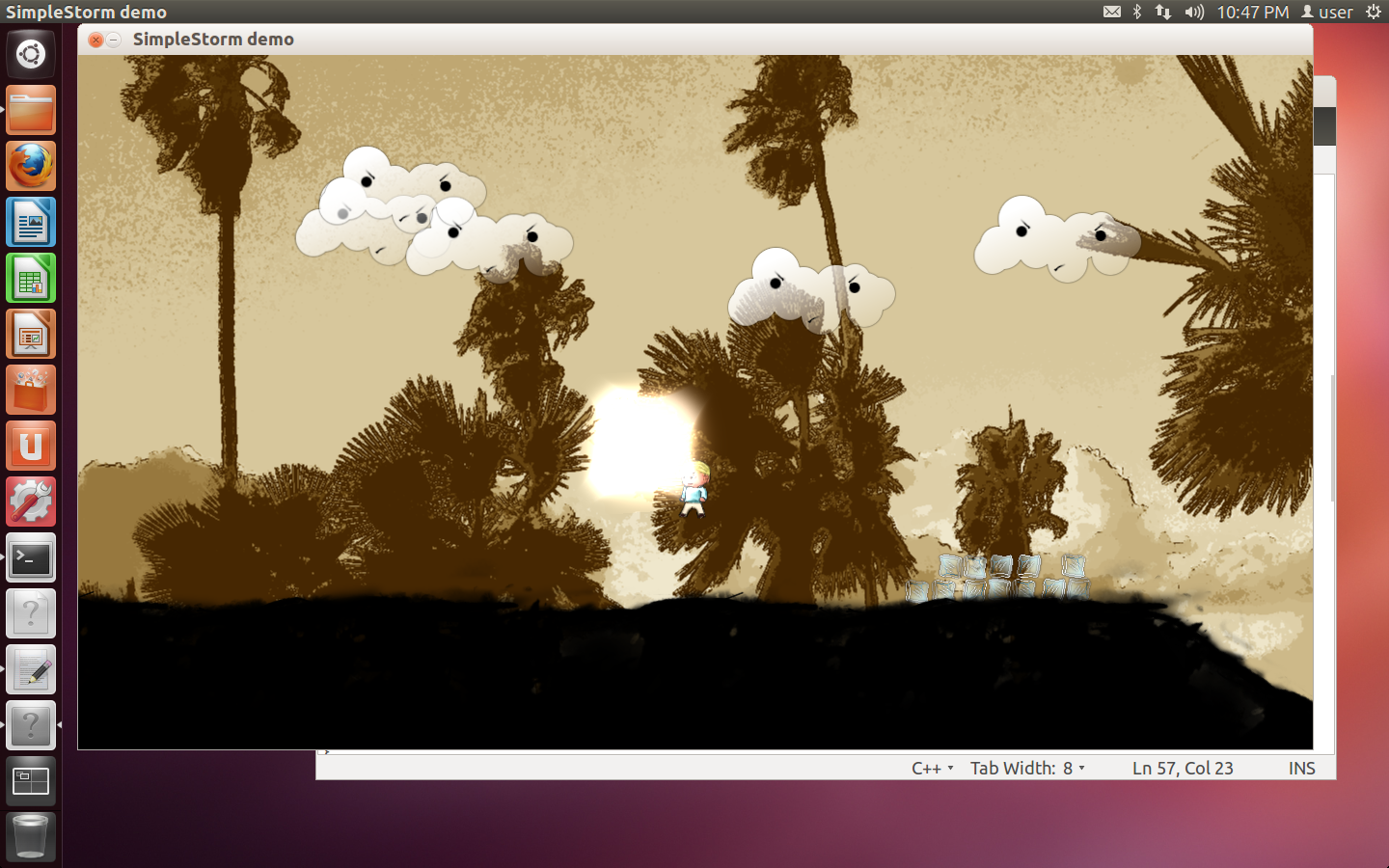

Yesterday was a lot of work. I worked really hard to get a higher frame rate for the game, because I know that my post-processing effects will be costly. Sometimes certain obvious things take a few days to sink in. It’s funny how I was looking everywhere to improve my fill-rate and bandwidth performance when I knew the problem was a polygon issue. Drawing the entire scene twice (once for the lightmap/shadow pass and again for the actual rendering) was really stupid. I was doing this because certain elements in the shadow maps, such as the walking men, were dynamic, so the scene needed to be redrawn into the shadow map every time. However, the vast majority of the polygons in the scene were static. The solution was to pre-render the static geometry into one shadow map, render the dynamic geometry into a separate one, and then adapt all of my shaders to take an optional second shadow texture. This wasn’t easy, since much of my architecture wasn’t designed for multiple shadow maps per light. But I got it working.
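The idea of the split can be sketched on the CPU side roughly as follows. This is only an illustration, not the game's renderer: `ShadowMap`, `isLit`, and the bias value are hypothetical names and numbers, the syntax assumes a recent Swift toolchain, and in the real pipeline the depth comparison would live in the shaders.

```swift
// Hypothetical CPU-side model of a depth-only shadow map.
struct ShadowMap {
    let size: Int
    var depth: [Float]

    init(size: Int) {
        self.size = size
        // 1.0 = far plane, i.e. nothing occludes this texel yet.
        self.depth = [Float](repeating: 1.0, count: size * size)
    }

    // Keep the nearest occluder seen so far for a texel.
    mutating func write(x: Int, y: Int, depth d: Float) {
        let i = y * size + x
        depth[i] = min(depth[i], d)
    }

    func sample(x: Int, y: Int) -> Float {
        return depth[y * size + x]
    }

    // Reset every texel to the far plane.
    mutating func clear() {
        for i in depth.indices { depth[i] = 1.0 }
    }
}

// A point is lit only if neither map holds a closer occluder.
// `dynamicMap` is optional, mirroring a shader that takes an
// optional second shadow texture.
func isLit(x: Int, y: Int, fragmentDepth: Float,
           staticMap: ShadowMap, dynamicMap: ShadowMap?,
           bias: Float = 0.001) -> Bool {
    var nearest = staticMap.sample(x: x, y: y)
    if let dyn = dynamicMap {
        nearest = min(nearest, dyn.sample(x: x, y: y))
    }
    return fragmentDepth - bias <= nearest
}

// Static geometry: rendered once, up front.
var staticMap = ShadowMap(size: 4)
staticMap.write(x: 1, y: 1, depth: 0.3)   // e.g. a wall

// Dynamic geometry: cleared and re-rendered whenever it moves.
var dynamicMap = ShadowMap(size: 4)
dynamicMap.clear()
dynamicMap.write(x: 2, y: 2, depth: 0.5)  // e.g. a walking man
```

The expensive static pass is paid once, and only the small dynamic map is redrawn, which is where the polygon savings come from.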

The above boosted my frame rates back into a very acceptable range, so I’m happy. It’s a little annoying that, for some reason, on OS X Yosemite I can no longer turn off VSync in windowed mode. So I’ve had to go fullscreen during debugging to see my max frame rates, which are now hovering well over 100.

As far as Swift goes, I’m now on the fully released Xcode 6.1 and I’m having far fewer problems with SourceKit crashing, which is great. The IDE is generally still a lot slower than its predecessor, which I hope improves with time. As I write more and more code in Swift, certain features are starting to prove really useful as I get used to the language. Among these are tuples, willSet/didSet, and operator overloading. On the ObjC side, I’ve had to rely on this bulky MathVector class to do my vector math. With Swift, I’ve been able to add all of the math operators I need directly to my low-level C structs via an extension. This has already saved me a lot of work and made the math code that I’ve written for the game a lot cleaner.
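To illustrate the first two of those features, here is a minimal sketch of tuples and willSet/didSet. `Player`, `health`, and `bounds(of:)` are hypothetical names made up for this example and are not taken from the game, and the syntax assumes a recent Swift toolchain rather than the Xcode 6.1 compiler.

```swift
// Hypothetical game object; the names are illustrative only.
final class Player {
    var log: [String] = []

    var health: Int = 100 {
        willSet {
            // `newValue` is the value about to be stored.
            log.append("health will change to \(newValue)")
        }
        didSet {
            // `oldValue` is the previous value. Assigning to the property
            // inside its own didSet does not re-trigger the observers.
            if health < 0 { health = 0 }
            if health > 100 { health = 100 }
            log.append("health changed from \(oldValue) to \(health)")
        }
    }
}

// A tuple returns several values without declaring a dedicated type.
func bounds(of values: [Float]) -> (lowest: Float, highest: Float)? {
    guard let first = values.first else { return nil }
    var lowest = first
    var highest = first
    for v in values {
        lowest = min(lowest, v)
        highest = max(highest, v)
    }
    return (lowest, highest)
}

let player = Player()
player.health = 150          // clamped to 100 by didSet

if let range = bounds(of: [3, 1, 2]) {
    // Tuple elements are read by label.
    player.log.append("range: \(range.lowest)...\(range.highest)")
}
```

The observers keep validation next to the property itself, and the labeled tuple avoids a one-off struct just to return two numbers.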

Here’s an example of the vector math extension code in Swift:

    extension float3: Printable
    {
        static var zero: float3
        {
            return float3(x: 0, y: 0, z: 0)
        }

        init(_ x: Float, _ y: Float, _ z: Float)
        {
            self.x = x;
            self.y = y;
            self.z = z;
        }

        subscript(index: Int) -> Float
        {
            get
            {
                assert(index < 3 && index >= 0)
                switch(index)
                {
                case 0:
                    return x
                case 1:
                    return y
                case 2:
                    return z
                default:
                    break
                }
                return 0
            }
            set
            {
                assert(index < 3 && index >= 0)
                switch(index)
                {
                case 0:
                    x = newValue
                case 1:
                    y = newValue
                case 2:
                    z = newValue
                default:
                    break
                }
            }
        }

        var length: Float
        {
            return sqrtf(dot(self))
        }

        var lengthSquared: Float
        {
            return dot(self)
        }

        var xz: float2
        {
            return float2(x, z)
        }

        func normalized() -> float3
        {
            let len = self.length
            return float3(x/len, y/len, z/len)
        }

        func dot(rhs: float3) -> Float
        {
            return x*rhs.x + y*rhs.y + z*rhs.z
        }

        func cross(rhs: float3) -> float3
        {
            var r = float3.zero
            r.x = (y * rhs.z) - (z * rhs.y);
            r.y = (z * rhs.x) - (x * rhs.z);
            r.z = (x * rhs.y) - (y * rhs.x);
            return r
        }

        public var description: String
        {
            return "(\(x), \(y), \(z))"
        }
    }

    func + (lhs: float3, rhs: float3) -> float3
    {
        return float3(lhs[0]+rhs[0], lhs[1]+rhs[1], lhs[2]+rhs[2])
    }

    func += (inout lhs: float3, rhs: float3)
    {
        lhs = lhs + rhs
    }

    func - (lhs: float3, rhs: float3) -> float3
    {
        return float3(lhs[0]-rhs[0], lhs[1]-rhs[1], lhs[2]-rhs[2])
    }

    func -= (inout lhs: float3, rhs: float3)
    {
        lhs = lhs - rhs
    }

    func / (lhs: float3, rhs: float3) -> float3
    {
        return float3(lhs[0]/rhs[0], lhs[1]/rhs[1], lhs[2]/rhs[2])
    }

    func /= (inout lhs: float3, rhs: float3)
    {
        lhs = lhs / rhs
    }

    func * (lhs: float3, rhs: float3) -> float3
    {
        return float3(lhs[0]*rhs[0], lhs[1]*rhs[1], lhs[2]*rhs[2])
    }

    func *= (inout lhs: float3, rhs: float3)
    {
        lhs = lhs * rhs
    }

    func * (lhs: float3, scalar: Float) -> float3
    {
        return float3(lhs[0]*scalar, lhs[1]*scalar, lhs[2]*scalar)
    }

    func *= (inout lhs: float3, rhs: Float)
    {
        lhs = lhs * rhs
    }

    func == (lhs: float3, rhs: float3) -> Bool
    {
        return (lhs[0]==rhs[0] && lhs[1]==rhs[1] && lhs[2]==rhs[2])
    }

    func != (lhs: float3, rhs: float3) -> Bool
    {
        return !(lhs == rhs)
    }
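The listing is written for the Swift that shipped with Xcode 6.1. In later Swift versions `Printable` was renamed `CustomStringConvertible` and `inout` moved in front of the parameter type, so here is a condensed sketch of the same idea in current syntax. `Vec3` is a hypothetical stand-in for the C struct `float3`, and only a few of the operators are repeated.

```swift
// Hypothetical stand-in for the C struct `float3` used in the listing.
struct Vec3: Equatable, CustomStringConvertible {
    var x: Float
    var y: Float
    var z: Float

    init(_ x: Float, _ y: Float, _ z: Float) {
        self.x = x
        self.y = y
        self.z = z
    }

    static let zero = Vec3(0, 0, 0)

    func dot(_ rhs: Vec3) -> Float {
        return x * rhs.x + y * rhs.y + z * rhs.z
    }

    func cross(_ rhs: Vec3) -> Vec3 {
        return Vec3(y * rhs.z - z * rhs.y,
                    z * rhs.x - x * rhs.z,
                    x * rhs.y - y * rhs.x)
    }

    var length: Float {
        return dot(self).squareRoot()
    }

    var description: String {
        return "(\(x), \(y), \(z))"
    }

    // Operators can be declared as static members of the type.
    static func + (lhs: Vec3, rhs: Vec3) -> Vec3 {
        return Vec3(lhs.x + rhs.x, lhs.y + rhs.y, lhs.z + rhs.z)
    }

    static func * (lhs: Vec3, scalar: Float) -> Vec3 {
        return Vec3(lhs.x * scalar, lhs.y * scalar, lhs.z * scalar)
    }

    // `inout` precedes the parameter type in current Swift.
    static func += (lhs: inout Vec3, rhs: Vec3) {
        lhs = lhs + rhs
    }
}

// Usage: the math reads much like it would on paper.
var position = Vec3(1, 2, 3)
let velocity = Vec3(0, 1, 0)
position += velocity * 0.5
let normal = Vec3(1, 0, 0).cross(Vec3(0, 1, 0))
```

Here `==` and `!=` come from the synthesized `Equatable` conformance, so they do not need to be written by hand as in the original listing.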