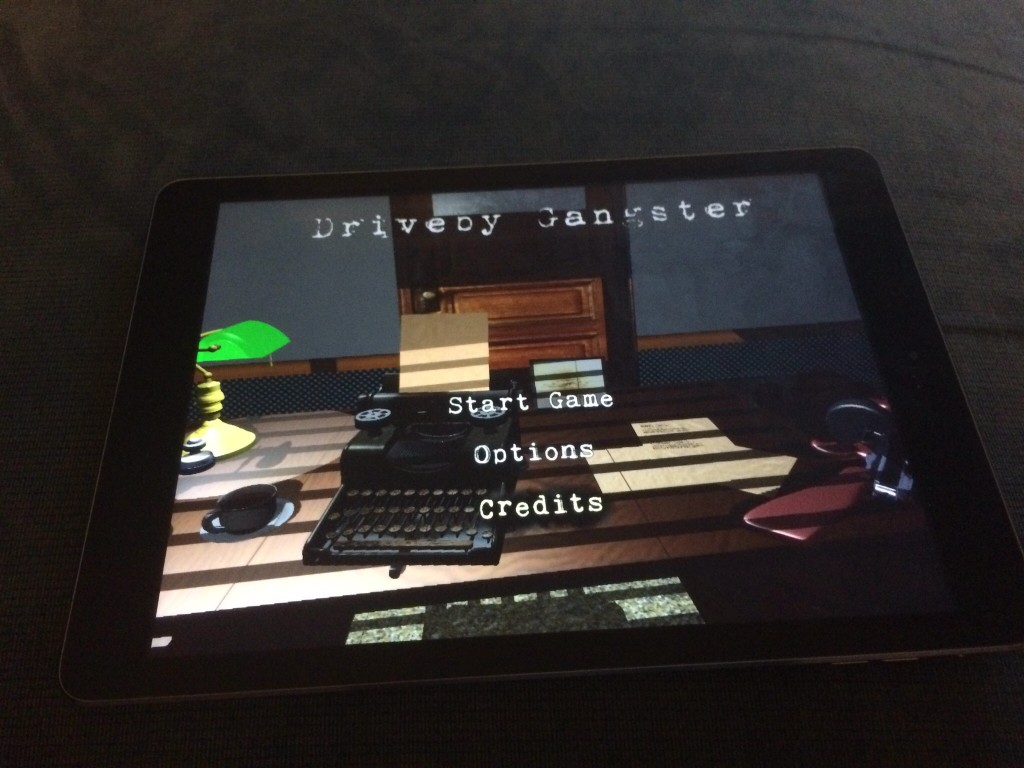

DG on Mobile

After putting roughly six months into this project, it seemed stupid not to go the extra mile and release the game for iOS if it could run there. So that’s what I spent the last few weeks doing, and I eventually succeeded (I submitted it to the App Store for review this weekend). Disclaimer: much of this post was written using voice dictation, so it might not flow as well as a typed document.

What it took

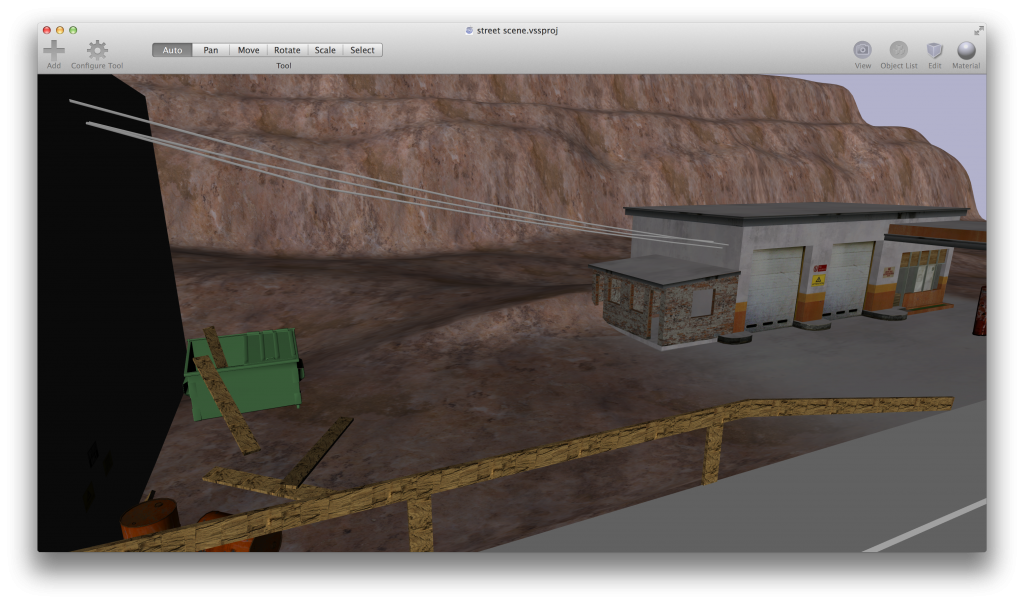

Now, even though mobile was in the back of my mind, this game was never really designed to run on mobile. The final desktop game took up roughly 1 GB of disk space and 1 GB of RAM while running. The RAM usage would slowly rise to 4 GB over time, which I suspected, and later confirmed, was caused by memory leaks.

To get the game to run on my iPhone 5s, the first thing I did was make some minor modifications to the graphics engine codebase so that its OpenGL 3.2 code could run on an iOS device. The easiest way to do this was to simply target the OpenGL ES 3.0 API. The differences between OpenGL 3.2 and OpenGL ES 3.0 are so minor that this was my best bet for an effortless port of the game. This sure beat the hell out of the alternative, which was to laboriously rewrite my dozens of now-forked shaders in GLSL for OpenGL ES 2.0. The downside, however, was that I was cutting off every iOS device that cannot run OpenGL ES 3.0 (anything older than the iPhone 5s or the iPad Air). I still decided to go for it despite all this.
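For illustration only, here is a minimal C++ sketch of the kind of shader-side change this involves. It assumes desktop shaders that begin with a GLSL 1.50 `#version` line (the GLSL version that pairs with OpenGL 3.2). The helper `toGLES3` and the `ShaderStage` enum are hypothetical, not taken from the game’s engine. The helper swaps the version directive for `#version 300 es`. For fragment shaders it also adds a default float precision, which GLSL ES 3.00 requires because fragment shaders get no default float precision there.

```cpp
#include <string>

enum class ShaderStage { Vertex, Fragment };

// Hypothetical helper: rewrites the header of a desktop GLSL 1.50 shader
// (OpenGL 3.2 core) so it can be compiled as GLSL ES 3.00 (OpenGL ES 3.0).
std::string toGLES3(const std::string& desktopSource, ShaderStage stage) {
    std::string header = "#version 300 es\n";
    if (stage == ShaderStage::Fragment) {
        // GLSL ES 3.00 fragment shaders have no default float precision,
        // so one must be declared. mediump is often cheaper on mobile GPUs;
        // highp could be used for shaders that need the extra range.
        header += "precision mediump float;\n";
    }

    // Drop the desktop #version line if the source starts with one.
    std::string body = desktopSource;
    if (body.rfind("#version", 0) == 0) {
        const auto eol = body.find('\n');
        body = (eol == std::string::npos) ? std::string() : body.substr(eol + 1);
    }
    return header + body;
}
```

This only handles the header. Anything else that differs between the two dialects would still need attention in the shader body. For example, GLSL ES 3.00 requires explicit `layout(location = N)` qualifiers when a fragment shader has more than one output.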

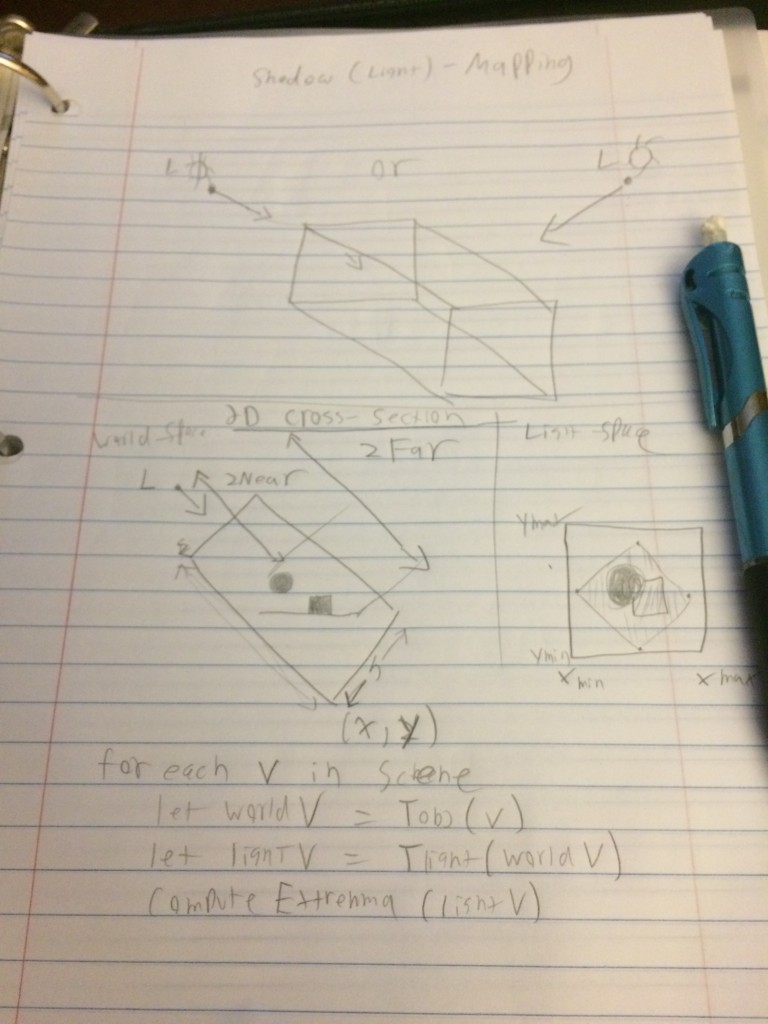

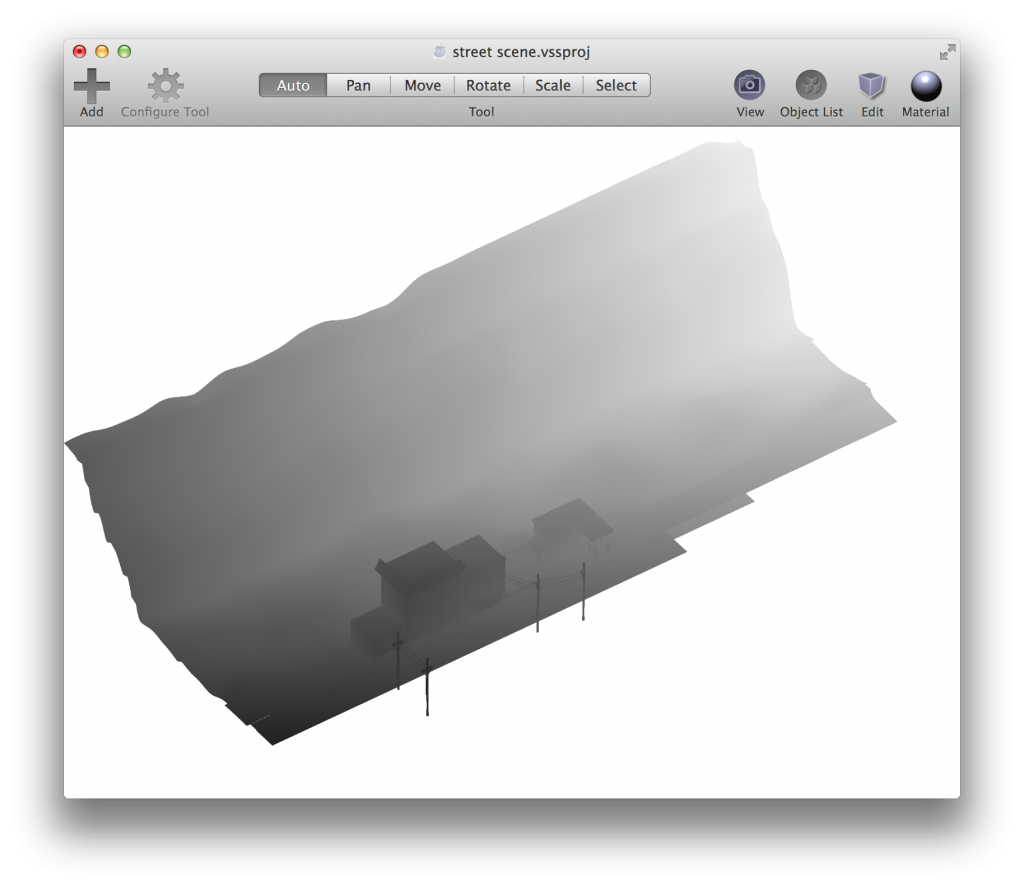

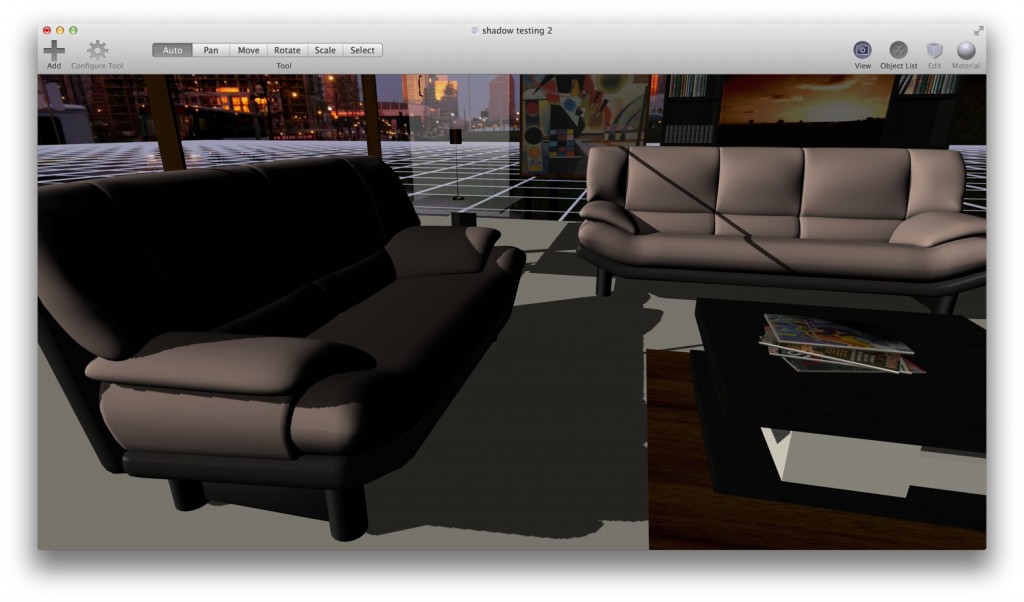

OpenGL ES 3.0 supported so many things that I needed for my game (such as multiple render targets, shadow maps, and occlusion queries) that the whole process worked out really well. Things that used to be nearly impossible to do with mobile OpenGL were now surprisingly easy to accomplish.

Still, there were some serious challenges that I had to overcome.

What sucked and needed fixing

Right off the bat, the most serious challenge presented itself after running a few levels of the game on my iPhone: RAM consumption. Loading the first level alone resulted in memory warnings and, eventually, a crash from allocating too much RAM on the device (the limit is roughly 512 MB on an iPhone 5s). This almost made me cancel the idea on the spot, thinking there was no way the game could fit on a mobile device as designed. When I ran Instruments, a utility that comes with Xcode to help you trace allocations and such, I discovered that my sound effects alone were taking up well over 100 MB of RAM. I also discovered that the heap was growing at an alarming rate between level reloads, which eventually led to the memory crash. Other assets such as textures and animation data were taking up another hundred megabytes or more. GPU memory is not easily trackable on an iOS device, so I could only speculate how much the standard high-resolution desktop textures were taking up.
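To see why audio can eat RAM this quickly, it helps to do the arithmetic for uncompressed PCM, since that is what the core OpenAL buffer formats hold once OGG data has been decoded. The post doesn’t state the sample format, so the sketch below assumes 44.1 kHz, 16-bit stereo purely as an example. `pcmBytes` is a hypothetical helper.

```cpp
#include <cstdint>

// Bytes needed to hold uncompressed PCM audio in memory.
// seconds:        clip length
// sampleRate:     frames per second (e.g. 44100, an assumed value)
// channels:       1 for mono, 2 for stereo
// bytesPerSample: 2 for 16-bit samples
std::uint64_t pcmBytes(double seconds, std::uint32_t sampleRate,
                       std::uint32_t channels, std::uint32_t bytesPerSample) {
    const auto frames = static_cast<std::uint64_t>(seconds * sampleRate);
    return frames * channels * bytesPerSample;
}
```

Under those assumptions, one minute of audio is 10,584,000 bytes (roughly 10 MB). A handful of long clips plus dozens of shorter effects can plausibly pass 100 MB. Halving a clip’s length halves its buffer, which is why truncating the longest sounds pays off directly.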

I used the invaluable “generations” feature of Instruments to track down and eliminate the biggest causes of my memory leaks. The funniest one I can remember is the silent failure of the OpenAL alDeleteBuffers call, which leaked ALL the sound effects used in the game. That included the very large “radio music” buffer, which held more than a minute of music audio. OpenAL won’t delete a buffer that is still attached to a source, and it reports this only through alGetError. Leaking this was wasteful on desktop, but downright devastating on mobile. Discovering that I had to dequeue the audio buffer first solved that issue. Other stupidities, such as bad pointer casting and C++11 smart pointer retain cycles, accounted for the remaining memory leaks.
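The smart pointer retain cycles mentioned above follow a common pattern: two objects hold `std::shared_ptr`s to each other, so neither reference count ever reaches zero. A usual C++11 remedy is to make the back-reference a `std::weak_ptr`. The sketch below shows this with hypothetical `Vehicle` and `Turret` types, which are not the game’s actual classes.

```cpp
#include <memory>

struct Vehicle;

// Hypothetical child object that needs to refer back to its owner.
struct Turret {
    // Non-owning back-reference: does not keep the Vehicle alive.
    std::weak_ptr<Vehicle> owner;
};

struct Vehicle {
    // Owning reference: the Vehicle keeps its Turret alive.
    std::shared_ptr<Turret> turret;
};

std::shared_ptr<Vehicle> makeVehicle() {
    auto vehicle = std::make_shared<Vehicle>();
    vehicle->turret = std::make_shared<Turret>();
    // If `owner` were a shared_ptr, the vehicle and turret would keep each
    // other alive, and both would leak once the caller released the vehicle.
    vehicle->turret->owner = vehicle;
    return vehicle;
}
```

`owner.lock()` returns an empty `shared_ptr` once the vehicle has been destroyed, so code that follows the back-reference should check the result before using it.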

Apart from the memory leaks, there was a very serious issue that almost crippled the release of the entire game on mobile. This one was not my fault. It’s related to an arcane bug in the iOS OpenGL ES 3.0 driver and how it handles occlusion queries. Leave it to me to find GL driver bugs on just about every platform I target with my games. This particular bug caused repeated use of occlusion queries to eventually crash the whole app inside the driver code. Frustrated, I posted about it on the Apple Developer Forums, and one of the engineers (one of the awesome engineers) tracked down the issue and provided a workaround that fixed it. For those who are curious, the workaround was to run the occlusion queries without an active color buffer attached to the default framebuffer object, bypassing the issue that leads to the crash.

Fixing all these issues, however, still would not have been enough to get the game to run on iOS.

Optimizations for mobile

To get the game to “fit” on my iPhone 5s, and on iOS in general, I had to make the following optimizations:

- UX/controls – The game needed to support a touchscreen interface. I accomplished this by adding touch regions for aiming (a touchpad on the left side of the screen) and shooting (the lower right-hand corner of the screen). Vehicle acceleration/deceleration is mapped to tilting the device.

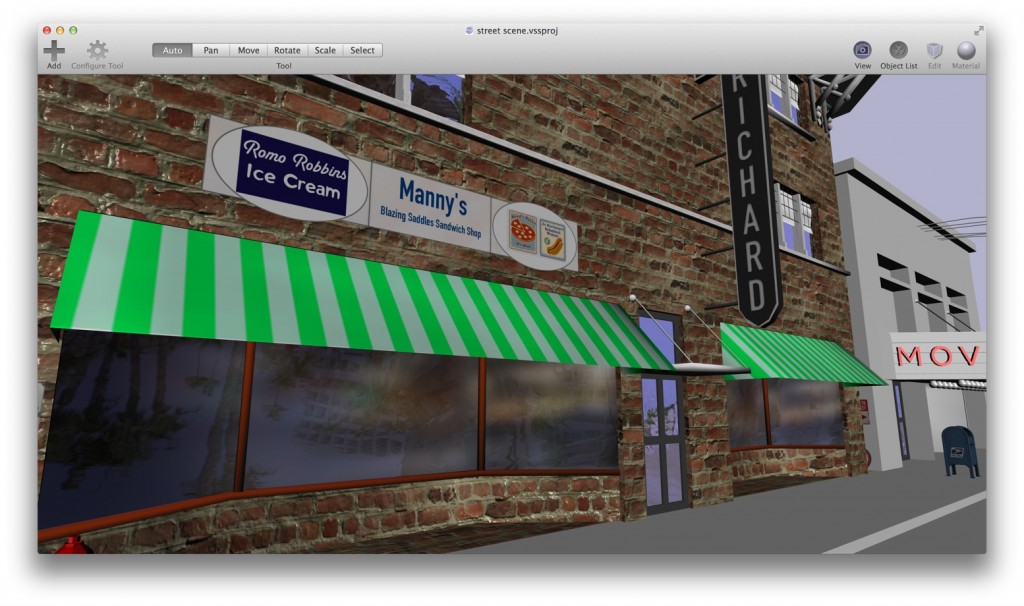

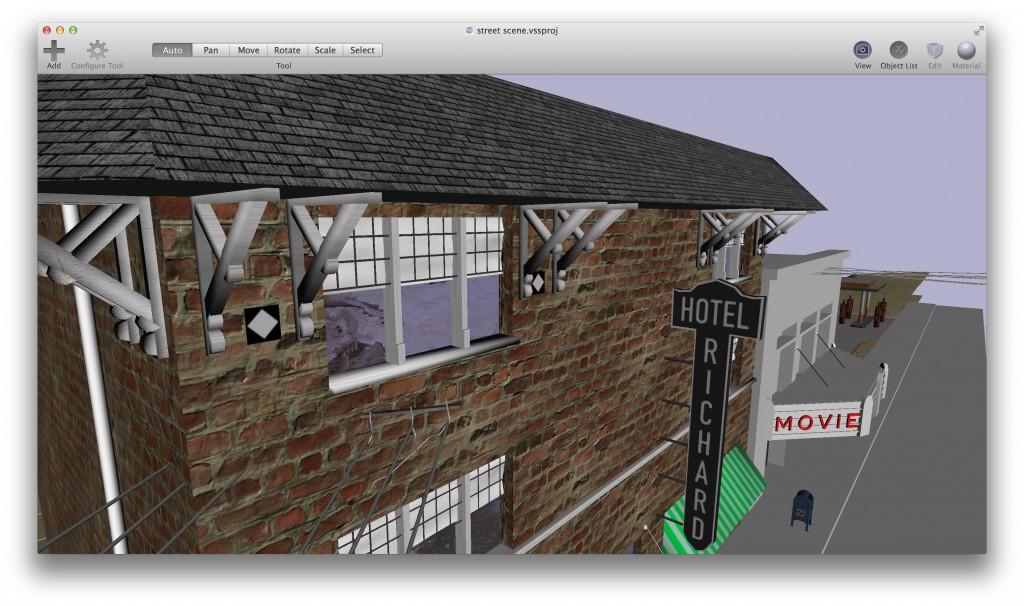

- Compressed reduced-resolution textures – this was probably the biggest deal. I took advantage of ETC2, the texture compression format that is standard in OpenGL ES 3.0, to pre-compress all textures. This drastically reduced both the RAM and disk space consumption of the game’s textures. It also drastically decreased the time it took for scenes to load, offsetting the load delays caused by the slower CPU on my iPhone.

- Shortened sound effects – I truncated very long sound effects, such as the radio music, which were loaded from OGG files. I cut these from over one minute down to loops of roughly 30 seconds at most, drastically reducing the amount of RAM taken up by audio. The sound effects have to exist as full audio buffers because of the real-time effects that I apply to them using OpenAL in the game.

- Gzipped scene metadata – despite already using a binary XML format to store the actual scene data, I found that the disk space used by my scenes was still very high, and I could not expect users to download a 1 GB app on iOS. I fixed this by gzipping the project files and decompressing them on the fly as they load (using C++ gzip file streams). Although this adds a very slight CPU hit on load, it was worth it for the reduced app size, which I ultimately got down to roughly 500 MB.

- Shader optimization – because many of the game’s shaders were written for far more powerful desktop GPUs, I had to make optimizations to account for the slower GPUs on mobile. These included reducing precision in some areas and disabling the more expensive effects. I also added an option to the game’s main menu to disable Retina resolution on certain devices, and even made this the default on devices such as the first-generation iPad Air.
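The touch layout from the UX/controls bullet above boils down to a hit test plus a tilt-to-throttle mapping. The sketch below is a hypothetical version. The region boundaries, the dead zone, and the maximum tilt are made-up values. It assumes touches arrive in normalized screen coordinates and that device pitch is already available in radians from the platform’s motion APIs.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical control regions for a landscape touchscreen.
enum class TouchRegion { AimPad, Fire, None };

// Classifies a touch in normalized screen coordinates, where (0, 0) is the
// top-left corner and (1, 1) the bottom-right. Boundaries are illustrative.
TouchRegion classifyTouch(float x, float y) {
    if (x < 0.5f) {
        return TouchRegion::AimPad;  // left half: aiming touchpad
    }
    if (x > 0.75f && y > 0.6f) {
        return TouchRegion::Fire;    // lower-right corner: shoot
    }
    return TouchRegion::None;
}

// Maps device pitch (radians) to a throttle in [-1, 1]. Tilts smaller than
// deadZone are ignored so the vehicle does not creep while the device is held
// roughly level. Tilts at or beyond maxTilt give full acceleration or full
// deceleration, depending on direction.
float tiltToThrottle(float pitch, float deadZone = 0.1f, float maxTilt = 0.5f) {
    const float magnitude = std::fabs(pitch);
    if (magnitude < deadZone) {
        return 0.0f;
    }
    const float t = (magnitude - deadZone) / (maxTilt - deadZone);
    return std::copysign(std::min(t, 1.0f), pitch);
}
```

The dead zone is the main design choice here. Without it, the small unconscious tilts of a hand-held device would be read as constant acceleration or braking.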
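To put rough numbers on the texture savings, consider how ETC2 stores data. Each 4x4 block of texels takes 8 bytes for RGB (`GL_COMPRESSED_RGB8_ETC2`) or 16 bytes for RGBA (`GL_COMPRESSED_RGBA8_ETC2_EAC`). The same block takes 64 bytes as uncompressed RGBA8. The helpers below are a hypothetical sketch for estimating sizes, including a full mip chain. The post doesn’t say which ETC2 variant or which resolutions the game actually uses.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Bytes for one mip level of an ETC2 texture. ETC2 encodes 4x4 texel blocks:
// 8 bytes per block for RGB8, 16 bytes per block for RGBA8 (ETC2 + EAC alpha).
// Partial blocks at the edges still cost a full block.
std::uint64_t etc2LevelBytes(std::uint32_t w, std::uint32_t h, bool hasAlpha) {
    assert(w > 0 && h > 0);
    const std::uint64_t blocks = std::uint64_t((w + 3) / 4) * ((h + 3) / 4);
    return blocks * (hasAlpha ? 16 : 8);
}

// Bytes for one mip level of an uncompressed RGBA8 texture (4 bytes/texel).
std::uint64_t rgba8LevelBytes(std::uint32_t w, std::uint32_t h) {
    assert(w > 0 && h > 0);
    return std::uint64_t(w) * h * 4;
}

// Total bytes for a full mip chain down to 1x1, using the given
// per-level size function.
template <typename LevelFn>
std::uint64_t mipChainBytes(std::uint32_t w, std::uint32_t h, LevelFn levelBytes) {
    std::uint64_t total = 0;
    while (true) {
        total += levelBytes(w, h);
        if (w == 1 && h == 1) {
            break;
        }
        w = std::max<std::uint32_t>(1, w / 2);
        h = std::max<std::uint32_t>(1, h / 2);
    }
    return total;
}
```

For a 1024x1024 texture, a single level is 4 MiB as RGBA8, 1 MiB as ETC2 RGBA, and 512 KiB as ETC2 RGB. Halving the resolution as well cuts each figure by another factor of four.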
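Defaulting to non-Retina rendering on specific hardware amounts to a small lookup keyed on the device model. In this hypothetical sketch, `defaultRenderScale` returns 1.0 for the first-generation iPad Air (model identifiers `iPad4,1` through `iPad4,3`) and the device’s native scale otherwise. Two pieces are assumptions outside the sketch. One is how the model string is obtained, for example from the `machine` field filled in by `uname()`. The other is how the scale is applied to the GL view, for example through its `contentScaleFactor`.

```cpp
#include <string>
#include <unordered_set>

// Hypothetical default: render at 1x on devices whose GPU struggles at
// native Retina resolution, and at native scale everywhere else.
// "iPad4,1".."iPad4,3" are the first-generation iPad Air models.
float defaultRenderScale(const std::string& modelId, float nativeScale) {
    static const std::unordered_set<std::string> lowScaleModels = {
        "iPad4,1", "iPad4,2", "iPad4,3"};
    return lowScaleModels.count(modelId) ? 1.0f : nativeScale;
}
```

A main-menu option like the one described would then simply override this default with the player’s choice.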

I also added support for MFi game controllers, an API available on iOS 7 and above, so that players with physical gamepads get the best gaming experience possible. This was not a big stretch, considering the original game was designed for physical buttons in the first place.

After doing all of this crazy stuff, I finally got the game to run reliably, without crashing, on my iPhone 5s and iPad Air.

Victory.